This fall, thousands of student hackers learned cybersecurity skills in a Capture the Flag (CTF) challenge inspired by Watch_Dogs® 2. Watch_Dogs® 2 is a brand-new game from Ubisoft that follows the story of Marcus Holloway and his fellow blackhat hackers in DedSec, who use their skills to take down the evil Blume Corporation and its ctOS 2.0 surveillance system.

You can play 3 hours of Watch_Dogs® 2 on your PlayStation 4 or Xbox One and experience the game that IGN calls “A Blast to Play!” The FREE three-hour trial of the full game will be available for download starting January 17 on PS4 and January 24 on Xbox One.

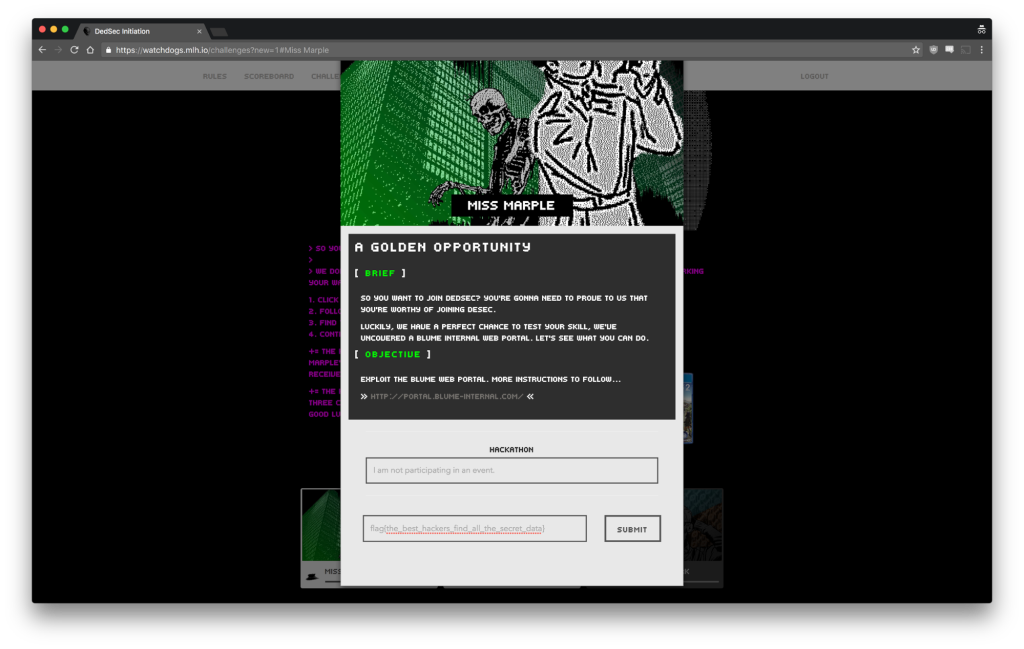

At each North American hackathon in the Fall 2016 semester, we held a mini-event that brought together huge groups of hackers to try the first level of the Watch_Dogs® 2 CTF. Our CTF game is an immersive story in which students learn security skills by detecting and exploiting common software vulnerabilities in a simulated environment. The hacker with the fastest time at each hackathon won a copy of the game upon release. After the mini-event, hackers could try Levels 2 and 3 on their own to compete for the Grand Prize.

With the fastest aggregate time to complete all three levels, Hidehiro Anto became our Grand Prize winner. He won a trip to Montreal to tour Ubisoft’s HQ and meet the developers who created Watch_Dogs® 2. Hidehiro studies Physics at UCLA and has been participating in CTF challenges since high school. Though he is not a programmer by training, he has built some cool hacks, like a homemade word processor, and has participated in security and bug bounty programs in the past.

He solved the Level 3 challenge using the command-line utility curl, but you’ll have to stay tuned for future tutorials on how to solve it yourself! This post is the first in a series of three that will cover solutions to each level of the CTF.

If you haven’t yet had a chance to try out the challenges, you can still head over to https://watchdogs.mlh.io/ and log in with MyMLH to give it a shot before reading the spoilers below.

It is important to note that some of the solutions will seem like large logical leaps. The truth is that when people try to break into a piece of software or test it for security issues, they test common vulnerabilities first and keep poking at increasingly complex parts of the software until something works and gives them the information they are looking for. It is often a long process of trial and error. At more advanced levels, it is common to use an automated security testing suite that runs these tests for you and works through lists of common problems.

What this means for your purposes is that some of the security issues you will be exploiting in this CTF game are simply well-known flaws that you will become more aware of as you gain more experience in securing your software. They don’t seem obvious now, but once you’ve solved the challenge you will always think to look for them in the future.

Level 1: Miss Marple

The first level of the CTF is a fairly simple exploit designed to teach introductory cybersecurity thinking.

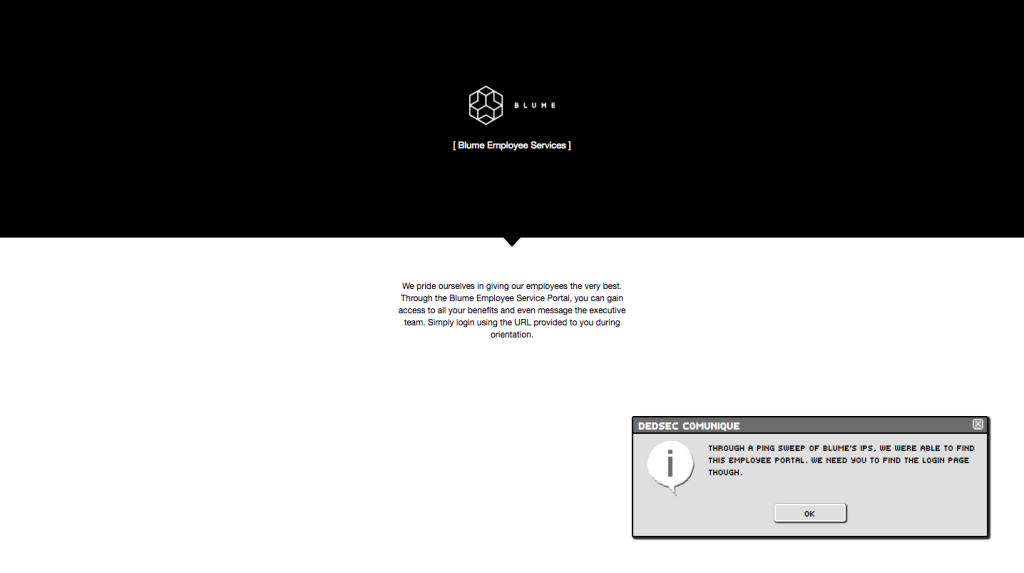

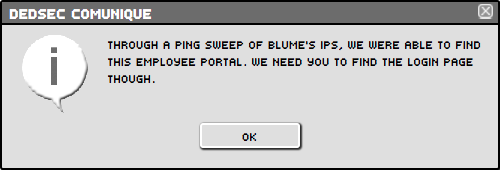

You land at a Blume Employee Services portal with a few hints about how to proceed. The most important detail here is that there is a file on the server that might tell you the page’s location.

As you think through this hint, you’ll realize that there are a number of ways to find a list of page locations on a typical website. It could be a sitemap, or perhaps the source of the page you’re already on, a clever wget command, or even a Google search for the site’s domain.
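A sitemap is one of the easiest of these to check, either by eye or with a short script. The sketch below assumes a standard `sitemap.xml` in the sitemaps.org 0.9 format; the helper name `list_sitemap_urls` and the sample sitemap with its `example.com` URLs are hypothetical and not part of the challenge.

```python
import xml.etree.ElementTree as ET

# XML namespace used by the sitemaps.org 0.9 protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

def list_sitemap_urls(xml_text: str) -> list[str]:
    """Return every <loc> entry from a <urlset> sitemap (hypothetical helper)."""
    root = ET.fromstring(xml_text)
    return [loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)]

# Hypothetical sitemap, for illustration only.
SAMPLE = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>http://example.com/</loc></url>
  <url><loc>http://example.com/about.html</loc></url>
</urlset>"""

if __name__ == "__main__":
    for url in list_sitemap_urls(SAMPLE):
        print(url)
```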

In this case, the answer is the robots.txt file.

robots.txt is a file that provides some basic configuration instructions for how search engines should crawl your website. You could tell Google not to crawl certain pages or you could provide a specific sitemap to the search engine that is crawling your website. You can learn more about robots.txt here.

robots.txt imposes no actual technical barriers; it is more like a set of suggestions, similar to putting a “Do Not Enter” sign on an unlocked door. Well-behaved search engines respect the instructions in robots.txt, but anyone can visit the pages referenced there unless they are protected in more secure ways, such as with a password or IP filtering.
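To see how purely advisory this is, here is a minimal sketch using Python's standard `urllib.robotparser`, which is the kind of check a polite crawler might run before fetching a page. The rules, the `example.com` URLs, and the `ExampleBot` user agent are hypothetical stand-ins, not the challenge's file.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt rules, supplied as lines instead of downloaded.
RULES = [
    "User-agent: *",
    "Disallow: /private/",
]

parser = RobotFileParser()
parser.parse(RULES)

# A well-behaved crawler asks before fetching; the answer is only advice.
blocked = parser.can_fetch("ExampleBot", "http://example.com/private/report.html")
allowed = parser.can_fetch("ExampleBot", "http://example.com/index.html")

if __name__ == "__main__":
    print(blocked, allowed)
```

Calling `can_fetch` is entirely voluntary: nothing in `urllib.request` or any other HTTP client consults robots.txt on its own, so a client that skips the check can request `/private/report.html` like any other URL.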

As a first test, we can simply append the robots.txt filename to the site’s root URL and see whether the file is there.

The robots.txt file is indeed found there, at http://portal.blume-internal.com/robots.txt and includes the following instructions:

```
User-agent: *
Disallow: /employee-login-426C756D65.html
```

What would someone do who is trying to break into this server? They would go to the Disallowed page, of course!
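Both steps, finding robots.txt at the site root and turning its Disallow lines into full URLs, are easy to script. In this sketch the helper name `disallowed_urls` is hypothetical, and the file contents are pasted in as a string rather than downloaded, so it runs without network access.

```python
from urllib.parse import urljoin

SITE_ROOT = "http://portal.blume-internal.com/"

# Contents of the challenge's robots.txt, pasted in rather than fetched.
ROBOTS_TXT = """User-agent: *
Disallow: /employee-login-426C756D65.html
"""

def disallowed_urls(site_root: str, robots_txt: str) -> list[str]:
    """Return an absolute URL for every non-empty Disallow rule."""
    urls = []
    for line in robots_txt.splitlines():
        # Strip comments and surrounding whitespace.
        line = line.split("#", 1)[0].strip()
        field, _, value = line.partition(":")
        # An empty "Disallow:" means "allow everything", so skip it.
        if field.strip().lower() == "disallow" and value.strip():
            urls.append(urljoin(site_root, value.strip()))
    return urls

if __name__ == "__main__":
    # The "first test": robots.txt always lives at the root of the site.
    print(urljoin(SITE_ROOT, "/robots.txt"))
    for url in disallowed_urls(SITE_ROOT, ROBOTS_TXT):
        print(url)
```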

When you go to that page, you receive another hint and a Generator Key. The hint tells you that there is an encoded Generator Key and implies that you need to figure out how it was encoded.

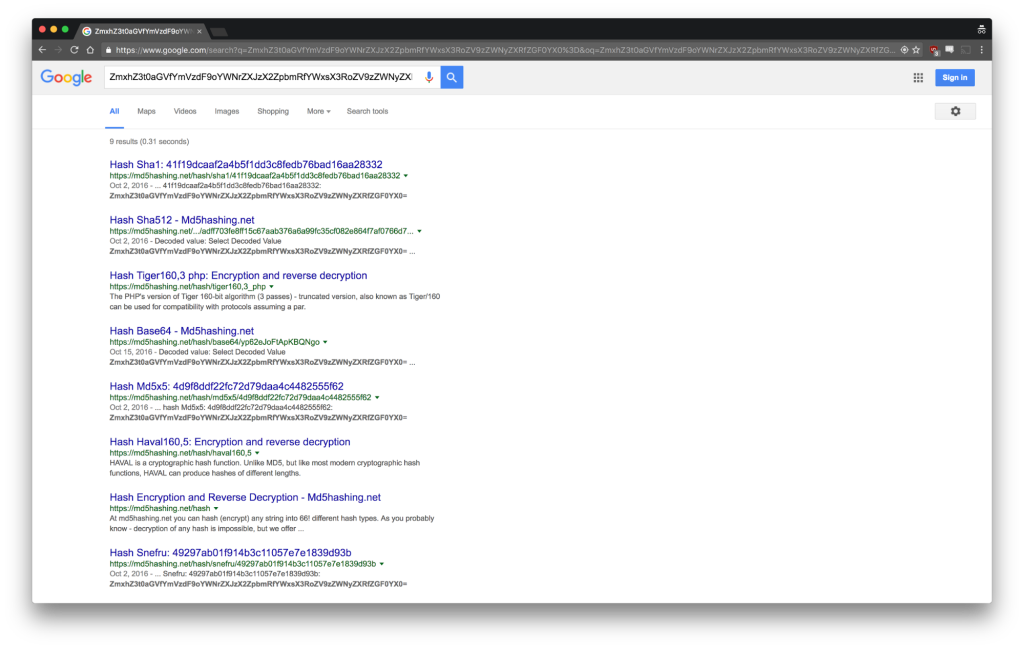

Unless you can recognize different encodings and hash formats on sight, you may have to do some Googling. If you paste the Generator Key (ZmxhZ3t0aGVfYmVzdF9oYWNrZXJzX2ZpbmRfYWxsX3RoZV9zZWNyZXRfZGF0YX0=) into Google, the results give you a few good hints, even though the websites themselves are unrelated to the challenge.

They reference things like sha512, base64, and md5, which suggests trying to recover the Key with one of those algorithms. MD5 and SHA-512 are one-way hash functions, so online “md5 decrypter” and “sha512 decrypter” tools can only look a digest up in a table of previously hashed values. Running the Key through them turns up nothing, because the Key is not an MD5 or SHA-512 hash at all.
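A quicker way to narrow this down is to look at the shape of the string. MD5 and SHA-512 digests are usually written as 32 and 128 hexadecimal characters respectively, while base64 uses only A-Z, a-z, 0-9, `+` and `/`, comes in groups of four characters, and often ends in `=` padding. The heuristic below (function name `guess_format` is hypothetical) only guesses from shape; many strings fit more than one pattern, which is why the hex checks run first.

```python
import re

KEY = "ZmxhZ3t0aGVfYmVzdF9oYWNrZXJzX2ZpbmRfYWxsX3RoZV9zZWNyZXRfZGF0YX0="

def guess_format(s: str) -> str:
    """Rough guess at what a string is, based on its shape alone."""
    # Hex digests are checked first: a 32-character hex string is also valid base64.
    if re.fullmatch(r"[0-9a-fA-F]{32}", s):
        return "md5"
    if re.fullmatch(r"[0-9a-fA-F]{128}", s):
        return "sha512"
    # Base64: restricted alphabet, length a multiple of 4, at most two '=' pads.
    if len(s) % 4 == 0 and re.fullmatch(r"[A-Za-z0-9+/]+={0,2}", s):
        return "base64"
    return "unknown"

if __name__ == "__main__":
    print(guess_format(KEY))
```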

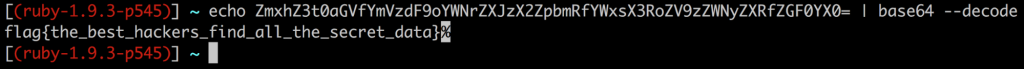

However, if you run the Key through a base64 decoder (as on the command line above, or with any base64 decoder tool you find online), the output will be the flag for Level 1.
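If you would rather script it, Python's standard `base64` module does the same job as the command-line decoder. A minimal sketch (the helper name `decode_key` is hypothetical):

```python
import base64

# Generator Key from the Level 1 employee login page.
KEY = "ZmxhZ3t0aGVfYmVzdF9oYWNrZXJzX2ZpbmRfYWxsX3RoZV9zZWNyZXRfZGF0YX0="

def decode_key(key: str) -> str:
    # validate=True rejects characters outside the base64 alphabet
    # instead of silently discarding them.
    return base64.b64decode(key, validate=True).decode("ascii")

if __name__ == "__main__":
    print(decode_key(KEY))  # prints the Level 1 flag
```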

You might be thinking to yourself that this is a silly example that would never happen in reality, but even basic security breaches like this happen frequently. Here is one such example of people exploiting the assumed security of a robots.txt file to find private, unprotected information on a website.

If you want to look at a well-done robots.txt file, check out the one used by Hacker News at https://news.ycombinator.com/robots.txt. It asks search engines not to crawl the URLs it lists, but if you try to visit any of them, you will find they are protected by a login requirement.

Hooked and want to learn more? Check out our Level 2 Tutorial!